Build Your Own Google Glass

A wearable computer that displays information and records video

By ROD FURLAN / JANUARY 2013

Illustration: Jason Lee

Last April, Google announced Project Glass. Its goal is to build a wearable computer that records your perspective of the world and unobtrusively delivers information to you through a head-up display. With Glass, not only might I share fleeting moments with the people I love, I’d eventually be able to search my external visual memory to find my misplaced car keys. Sadly, there is no release date yet. A developer edition is planned for early this year at the disagreeable price of US $1500, for what is probably going to be an unfinished product. The final version isn’t due until 2014 at the earliest [see “Google Gets in Your Face,” in this issue].

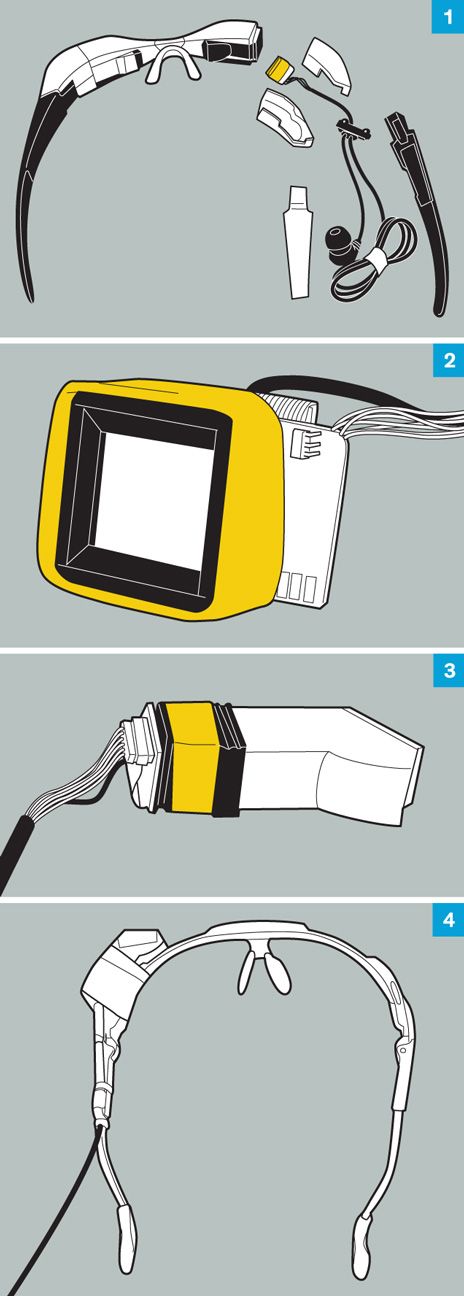

CREATIVE CANNIBALISM: The critical component for any Glass-type wearable computer is the microdisplay, which feeds information to the eye. I found a discontinued head-mounted display online and carefully disassembled it (1) to extract the microdisplay (2) and the optics (3) required to focus the image properly. I then mounted these components on a pair of plastic safety goggles (4).

But if Google is able to start developing such a device, it means that the components are now available and anyone should be able to follow suit. So I decided to do just that, even though I knew the final product wouldn’t be as sleek as Google’s and the software wouldn’t be as polished.

Most of the components required for a Glass-type system are very similar to what you can already find in a smartphone—processor, accelerometers, camera, network interfaces. The real challenge is to pack all those elements into a wearable system that can present images close to the eye.

I needed a microdisplay with a screen between 0.3 and 0.6 inches diagonally, and with a resolution of at least 320 by 240 pixels. Most microdisplays will take either a composite or VGA video input, the former being the easiest to work with. A quick search on the Alibaba global supply website returned several candidates; most suppliers will gladly fulfill orders for a single display and matching control electronics if you contact them directly. However, the optics sold for mounting these displays, which required them to be placed directly in front of the eye, were too bulky.
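If you are comparing supplier listings yourself, the selection criteria above are easy to turn into a quick filter. The following is only a minimal sketch: the `Microdisplay` record, the `meets_requirements` and `rank` helpers, and every entry in `CANDIDATES` are hypothetical placeholders I made up for illustration, not real parts or real supplier data. The only things taken from the text are the thresholds (0.3 to 0.6 inches, at least 320 by 240 pixels, composite or VGA input) and the preference for composite video.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Microdisplay:
    """One supplier listing (all fields are illustrative assumptions)."""
    name: str
    diagonal_in: float      # screen diagonal in inches
    width_px: int
    height_px: int
    inputs: frozenset       # e.g. frozenset({"composite", "vga"})


def meets_requirements(d: Microdisplay) -> bool:
    """Apply the size, resolution, and video-input criteria from the text."""
    right_size = 0.3 <= d.diagonal_in <= 0.6
    enough_pixels = d.width_px >= 320 and d.height_px >= 240
    usable_input = bool(d.inputs & {"composite", "vga"})
    return right_size and enough_pixels and usable_input


def rank(candidates):
    """Keep suitable displays; list composite-capable ones first,
    then smaller diagonals before larger ones."""
    suitable = [d for d in candidates if meets_requirements(d)]
    return sorted(suitable,
                  key=lambda d: ("composite" not in d.inputs, d.diagonal_in))


# Made-up listings, standing in for whatever a supplier search returns.
CANDIDATES = [
    Microdisplay("display-a", 0.44, 640, 480, frozenset({"composite"})),
    Microdisplay("display-b", 0.70, 800, 600, frozenset({"vga"})),
    Microdisplay("display-c", 0.50, 320, 240, frozenset({"vga"})),
    Microdisplay("display-d", 0.30, 160, 120, frozenset({"composite"})),
    Microdisplay("display-e", 0.35, 320, 240, frozenset({"composite", "vga"})),
]

if __name__ == "__main__":
    for d in rank(CANDIDATES):
        print(d.name, d.diagonal_in, f"{d.width_px}x{d.height_px}", sorted(d.inputs))
```

A filter like this says nothing about the optics, which turned out to be the harder constraint, so treat it as a first pass over listings rather than a buying decision.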

To build a sleek device, I needed to be able to mount the actual display on the side of the head and bring the image around to the eye. This setup is actually easy to make if you have the right equipment, which I don’t. Luckily, back in 2009, a company called Myvu (now out of business) sold a line of personal head-mounted video displays for iOS devices. Myvu’s products were sleek and small because they used a clever optical system alongside side-mounted screens.

I was able to procure a Myvu Crystal on eBay for just under $100. Within it I found several components needed for my wearable computer: optics, a 0.44-inch microdisplay, and a display controller capable of handling a composite video input. For the frame on which to mount the screen, I tried several kinds of safety goggles before settling on the ones that worked best.

Next, I needed an onboard computer. Since I was using a display controller that accepted only a composite video input, the obvious choice was a smartphone or programmable media player with an analog video output, such as an earlier model Apple iPhone or iPod Touch, or one of several Android phones. After considering the dimensions of all these systems, it was clear that having all the components head mounted (as with Google Glass) wasn’t a viable option, so the onboard computer became a separate component that would reside in a pocket and drive the microdisplay via a cable.

I settled on a fourth-generation iPod Touch. I had to “jailbreak” it, a process that removes limitations Apple builds into the iOS software. Once that was done, I could mirror the Touch’s main display to the microdisplay using its composite video output. This choice of onboard computer meant that the point-of-view camera (used to record images and video) had to be one that could communicate via the iPod Touch’s Wi-Fi or Bluetooth wireless interfaces. I used a Looxcie Bluetooth camera, which is small enough to be mounted on the side of the frame once you strip it from its plastic shell; you can order it online for around $150. (I’m already building a second iteration of my prototype around a Raspberry Pi. This will allow more control over the camera than is currently possible with the iOS apps that work with the Looxcie, as well as better integration of sensors such as accelerometers.)

My world changed the day I first wore my prototype. At first there was disappointment—my software was rudimentary, and the video cable running down to the onboard computer was a compromise I wasn’t particularly pleased with. Then there was discomfort, as I felt overwhelmed while trying to hold a conversation as information from the Internet (notifications, server statuses, stock prices, and messages) was streamed to me through the microdisplay. But when the batteries drained a few hours later and I took the prototype off, I had a feeling of loss. It was as if one of my senses had been taken away from me, which was something I certainly didn’t anticipate.

When I wear my prototype, I am connected to the world in a way that is fundamentally different from how I’m connected with my smartphone and computer. Our brains are eager to incorporate new streams of information into our mental model of the world. Once the initial period of adaptation is over, those augmented streams of information slowly fade into the background of our minds as conscious effort is replaced with subconscious monitoring.

The key insight I had while wearing my own version of Google Glass is that the true value of wearable point-of-view computing will not be in the initial goal of supporting augmented reality, which simply overlays information about the scene before the user. Instead, the greatest value will be in second-generation applications that provide total recall and augmented cognition. Imagine being able to call up (and share) everything you have ever seen, or read the transcripts for every conversation you ever had, alongside the names and faces of everyone you ever met. Imagine having supplemental contextual information relayed to you automatically so you could win any argument or impress your date.

Creating the software and hardware for such a “brain prosthesis” is certainly within the realm of possibility for the next decade, and I expect to see these features drive the mass adoption of the Google Glass technology.